Navigating the Maze: Charting a Course to Reduce Patient No-Shows

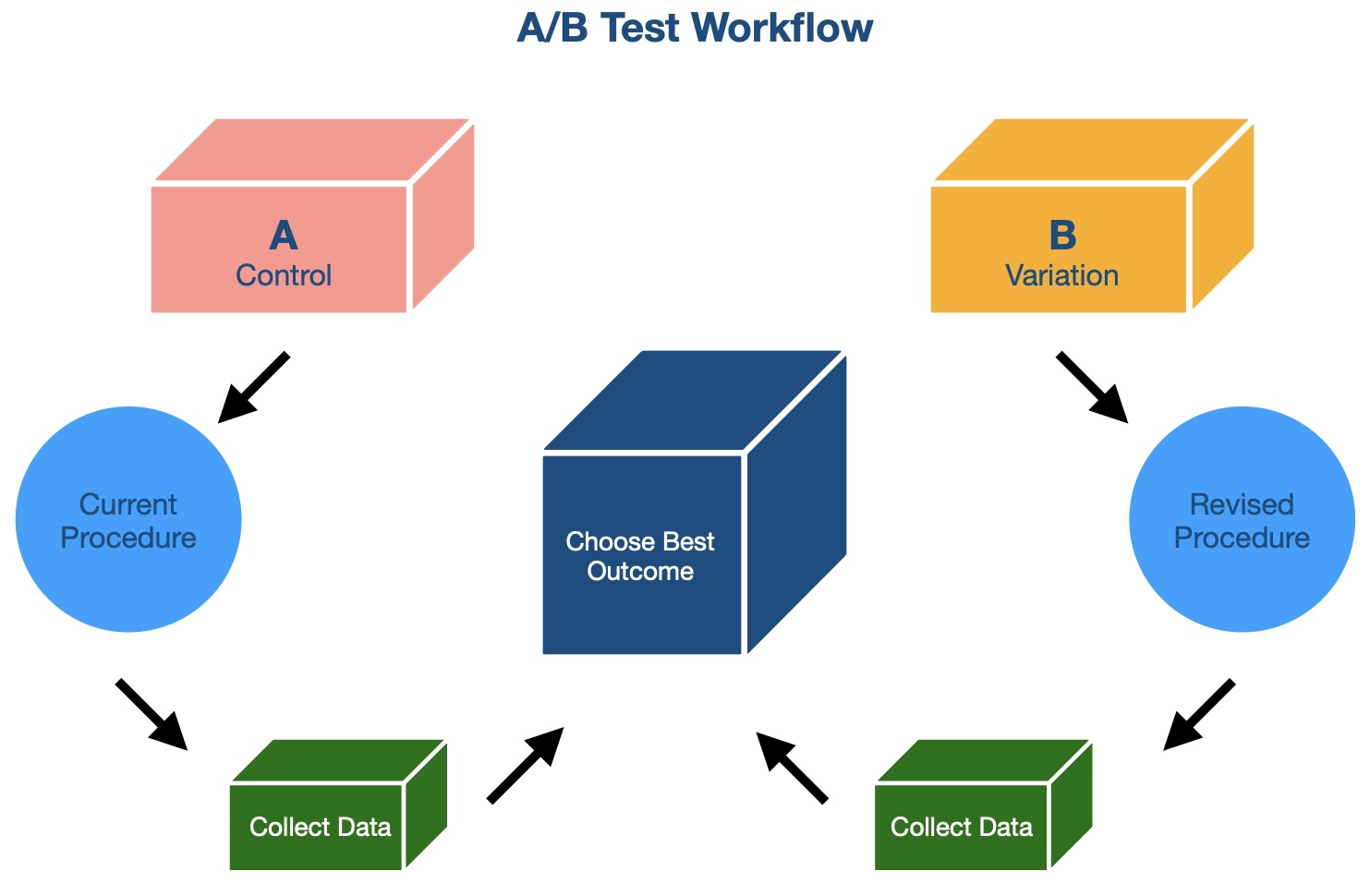

In the previous post, we explored why patient no-shows are a significant challenge for healthcare providers and introduced the concept of A/B testing as a potential solution. Now it’s time to dive into the details: How exactly do you design an A/B test to tackle a real-world problem like reducing no-shows?

In this post, we’ll walk through the process of designing our A/B test, from crafting a hypothesis to ensuring clean data and unbiased results. By the end, you’ll have a clear understanding of the steps involved in running a successful A/B test in healthcare—or any other field.

Step 1: Crafting a Hypothesis

Every A/B test starts with a question. For our test, the question was simple yet powerful:

Does the type of appointment reminder affect patient attendance?

From this question, I crafted the following hypothesis:

Hypothesis: Sending reminder phone calls or SMS messages will reduce no-show rates compared to email reminders.

This hypothesis gave the experiment a clear direction and a measurable outcome: the no-show rate for each reminder type, with email as the baseline for comparison.
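
One way to make the hypothesis testable is to state it as a null and an alternative hypothesis for each comparison against email. The notation below is introduced here for illustration and does not come from the original analysis: $p_{\text{email}}$, $p_{\text{call}}$, and $p_{\text{SMS}}$ stand for the no-show rates under each reminder type.

```latex
H_0:\; p_{m} = p_{\text{email}}
\qquad \text{versus} \qquad
H_1:\; p_{m} < p_{\text{email}},
\qquad m \in \{\text{call},\ \text{SMS}\}
```

Written this way, the experiment involves two comparisons (calls vs. email and SMS vs. email), each with the no-show rate as the measured outcome.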

Step 2: Preparing the Data

To ensure reliable results, data preparation was a critical step. Here’s what I did:

- Gathering Data: I collected appointment records, including patient demographics, appointment times, and reminder types.

- Filtering for Relevance: Only appointments with valid contact methods (email, phone number, SMS) were included in the study.

- Randomizing Groups: Patients were randomly assigned to one of three groups:

  - Group A: Received email reminders.

  - Group B: Received phone call reminders.

  - Group C: Received SMS reminders.

Random assignment minimized the risk of selection bias and made the three groups comparable on average, so any difference in attendance could be attributed to the reminder type rather than to who ended up in each group.
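
The filtering and randomization steps above could be sketched roughly as follows. This is a minimal illustration, not the code used in the study: the record fields (`patient_id`, `email`, `phone`), the sample patients, and the rule that a patient must be reachable by every reminder type are all assumptions made for the example.

```python
import random

def has_all_contact_methods(patient):
    """Assumption: a patient is eligible only if reachable by every reminder type."""
    return bool(patient.get("email")) and bool(patient.get("phone"))

def assign_groups(patients, groups=("A", "B", "C"), seed=42):
    """Shuffle eligible patients, then deal them round-robin into the groups."""
    eligible = [p for p in patients if has_all_contact_methods(p)]
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    rng.shuffle(eligible)
    return {p["patient_id"]: groups[i % len(groups)] for i, p in enumerate(eligible)}

# Hypothetical patient records, invented for illustration only.
patients = [
    {"patient_id": i, "email": f"patient{i}@example.com", "phone": f"555-01{i:02d}"}
    for i in range(1, 10)
]
patients.append({"patient_id": 10, "email": None, "phone": "555-0110"})  # no email: excluded

assignment = assign_groups(patients)
for patient_id, group in sorted(assignment.items()):
    print(patient_id, group)
```

Shuffling first and then dealing patients out in turn keeps the group sizes within one of each other, which a separate random draw per patient would not guarantee.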

Step 3: Running the Experiment

With the groups defined, I implemented the experiment:

- Reminder Methods: Each group received reminders through their assigned method.

- Observation Period: I monitored appointment attendance over several weeks to capture enough data for meaningful analysis.

- Recording Outcomes: For each appointment, I recorded whether the patient showed up (1) or missed the appointment (0).

This systematic approach ensured that I collected clean and actionable data.
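
As a rough sketch of how the recorded outcomes turn into the metric of interest, the snippet below computes a no-show rate per group from a log of `(group, showed_up)` pairs. The log is placeholder data invented for the example, not results from the experiment, and the function name is hypothetical.

```python
from collections import defaultdict

def no_show_rates(outcomes):
    """Return the share of missed appointments for each group."""
    totals = defaultdict(int)
    attended = defaultdict(int)
    for group, showed_up in outcomes:
        totals[group] += 1
        attended[group] += showed_up  # 1 = showed up, 0 = missed
    return {group: 1 - attended[group] / totals[group] for group in totals}

# Placeholder log: every group is given the same made-up pattern on purpose,
# so the example does not suggest any real result.
outcomes = [
    ("A", 1), ("A", 0), ("A", 1), ("A", 1),
    ("B", 1), ("B", 1), ("B", 0), ("B", 1),
    ("C", 1), ("C", 1), ("C", 1), ("C", 0),
]

rates = no_show_rates(outcomes)
for group in sorted(rates):
    print(f"Group {group}: {rates[group]:.0%} no-show rate")
```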

Step 4: Ensuring a Fair Test

A/B tests can easily go wrong if not carefully designed. Here are a few ways I ensured fairness and accuracy:

- Consistent Conditions: All groups were treated equally, except for the type of reminder they received.

- Avoiding External Factors: The test period was chosen to avoid holidays or unusual clinic schedules that could skew results.

- Sample Size: I made sure each group had enough appointments to give the test adequate statistical power, meaning a realistic difference in no-show rates could be detected if it existed.
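
To give a sense of what "enough appointments" can mean, here is a minimal sketch of the standard sample-size approximation for comparing two proportions. The no-show rates (20% for the baseline, 15% for the alternative), the 5% significance level, and the 80% power are assumptions chosen for illustration; they are not figures from this study.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(p_control, p_treatment, alpha=0.05, power=0.80):
    """Approximate appointments needed per group for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_control - p_treatment
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical rates: 20% no-shows with email, 15% with the alternative reminder.
n = sample_size_per_group(0.20, 0.15)
print(f"Appointments needed per group: {n}")
```

The smaller the difference you hope to detect, the more appointments each group needs. With three groups and two comparisons against email, a stricter `alpha` per comparison may also be worth considering.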

Challenges We Faced

No experiment is without its hurdles. During the test, we encountered:

- Incomplete Data: Some patient records lacked valid contact information, requiring additional filtering.

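- Uncontrolled Factors: Things outside the experiment's control, such as weather or transportation issues, may have influenced no-shows independently of the reminder type. Randomization should spread these roughly evenly across the groups, but they still add noise to the results.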

While these challenges didn’t derail the test, they provided valuable lessons for future experiments.

Next in the Series

In the next post, we’ll reveal the results of this A/B test and what they tell us about reducing patient no-shows. Stay tuned to see which reminder type came out on top—and how these findings can inform better strategies for healthcare providers.